I have been intrigued by Azure Event Hubs ever since it launched, especially the throughput it is supposed to handle: millions of events per second is ridiculously impressive.

I have always been curious how that output could be handled, though, because surely no database could keep up with it. Curiosity got the better of me, so I created a test that loads Stream Analytics with half a million events and measures how long each Stream Analytics output takes to write them. So far I have run this test only for Azure SQL Database and Azure Storage.

First I created a console application that starts 50 tasks, each of which sends 1,000 events:

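    using System;
    using System.Text;
    using System.Threading.Tasks;
    using Microsoft.ServiceBus.Messaging; // assumed: namespace of the classic Service Bus SDK

    class Program
    {
        static void Main()
        {
            // Start 50 tasks, each sending 1,000 events, then wait for all of them.
            Task[] tasks = new Task[50];
            for (int i = 0; i < 50; i++)
            {
                tasks[i] = Task.Run(() => SendEvent(1000));
            }
            Task.WaitAll(tasks);
        }

        public static void SendEvent(int eventCount)
        {
            // ConnectionString holds the Event Hub connection string (defined elsewhere).
            EventHubSender client = EventHubSender.CreateFromConnectionString(ConnectionString);
            for (int i = 0; i < eventCount; ++i)
            {
                // Small JSON payload; the timestamp and index vary the UserId.
                string serialisedData = String.Format("{{ \"ClientId\": \"TestClient1\", \"UserId\": \"TestUser1_{0}_{1}\", \"TestData\": \"Action\" }}", DateTime.Now.ToString(), i);
                client.Send(new EventData(Encoding.UTF8.GetBytes(serialisedData)));
            }
        }
    }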
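Before pointing the generator at a real Event Hub, it can help to check the fan-out arithmetic locally. The following is a minimal dry-run sketch, not the code used in the test: the `DryRun` class and its `CountingSend` method are hypothetical stand-ins that count events instead of calling `EventHubSender.Send`, so it runs without an Azure connection.

```csharp
using System;
using System.Text;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical dry-run harness: same 50 x 1,000 fan-out, but no Event Hub involved.
public static class DryRun
{
    private static long _sent;   // events "sent" so far, across all tasks
    private static long _bytes;  // total UTF-8 payload bytes produced

    // Stand-in for client.Send(...): only records that an event was produced.
    private static void CountingSend(byte[] payload)
    {
        Interlocked.Increment(ref _sent);
        Interlocked.Add(ref _bytes, payload.Length);
    }

    // Runs taskCount tasks, each producing eventsPerTask events; returns the total produced.
    public static long Run(int taskCount, int eventsPerTask)
    {
        Interlocked.Exchange(ref _sent, 0);
        Interlocked.Exchange(ref _bytes, 0);

        var tasks = new Task[taskCount];
        for (int t = 0; t < taskCount; t++)
        {
            tasks[t] = Task.Run(() =>
            {
                for (int i = 0; i < eventsPerTask; i++)
                {
                    string json = String.Format(
                        "{{ \"ClientId\": \"TestClient1\", \"UserId\": \"TestUser1_{0}_{1}\", \"TestData\": \"Action\" }}",
                        DateTime.Now.ToString(), i);
                    CountingSend(Encoding.UTF8.GetBytes(json));
                }
            });
        }
        Task.WaitAll(tasks);
        return Interlocked.Read(ref _sent);
    }

    public static void Main()
    {
        long total = Run(50, 1000);
        Console.WriteLine("Events per run: {0}", total); // 50 x 1,000 = 50000
        Console.WriteLine("Average payload bytes: {0}", Interlocked.Read(ref _bytes) / total);
    }
}
```

The counters use `Interlocked` because all 50 tasks update them concurrently; a plain `_sent++` could undercount.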

Within Azure I created the following in the same region:

- Azure Storage account (Standard LRS)

- Two Azure SQL Databases (5 DTU and 100 DTU, for comparison)

- Event Hub (10 throughput units)

- Stream Analytics job (6 streaming units)

- Azure VM (A3)

The query within Stream Analytics simply selected the input data and sent it to the output:

    SELECT *
    INTO [BlobStorage]
    FROM [EventHubTracking]

For each test I had only one output, either Blob or SQL. I didn’t want to run them at the same time in case the performance of one affected the other.

Within the virtual machine I created a batch file that executes the above code 10 times (10 × 50,000 = 500,000 events). I tested each scenario three times to confirm, and the timings were about the same each time.

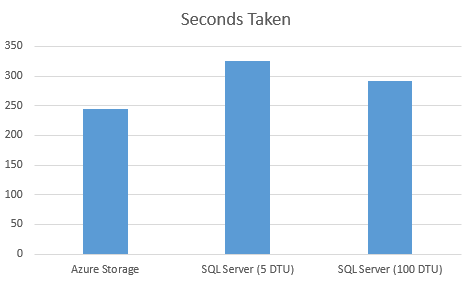

The time taken for each output was:

- Azure Storage: 244 seconds

- Azure SQL Database (100 DTU): 292 seconds

- Azure SQL Database (5 DTU): 326 seconds
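To put those timings in perspective, they can be converted into events written per second. This is a small sketch of the arithmetic only, assuming each figure covers all 500,000 events; the `Throughput` class and `EventsPerSecond` helper are names made up for the illustration.

```csharp
using System;

// Illustrative only: converts the measured durations into events per second.
public static class Throughput
{
    // Average events written per second for a run of totalEvents lasting `seconds`.
    public static double EventsPerSecond(int totalEvents, double seconds)
    {
        return totalEvents / seconds;
    }

    public static void Main()
    {
        const int totalEvents = 500000;

        // Durations in seconds, taken from the results listed above.
        var results = new[]
        {
            Tuple.Create("Azure Storage", 244.0),
            Tuple.Create("SQL (100 DTU)", 292.0),
            Tuple.Create("SQL (5 DTU)", 326.0),
        };

        foreach (var r in results)
        {
            Console.WriteLine("{0}: {1:F0} events/second",
                r.Item1, EventsPerSecond(totalEvents, r.Item2));
        }
        // Prints roughly 2049, 1712 and 1534 events/second respectively.
    }
}
```

On these numbers, all three outputs sustained somewhere between about 1,500 and 2,050 events per second.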

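Interestingly, Azure Storage was the quickest, taking about 16% less time than the 100 DTU database and about 25% less than the 5 DTU one, and it is obviously cheaper too. So the best option in a large-scale environment is to write to Azure Storage and then batch process from there, depending on what you want to do.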

What I also found interesting is that not one event was lost: there were exactly 500,000 each time. I had read that events may get lost, but that simply wasn’t the case in these tests.
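One way to check a count like this for the Blob output is to download the output files and count the events in them. The sketch below is an assumption about how that could be done, not the method used for the figures above: it assumes the output was written as line-separated JSON (one event per line), and the `EventCounter` class and the local `output` folder are hypothetical.

```csharp
using System;
using System.IO;
using System.Linq;

// Hypothetical checker: counts events in locally downloaded output files.
public static class EventCounter
{
    // Counts non-empty lines across every file under `folder`,
    // assuming one JSON event per line.
    public static long CountEvents(string folder)
    {
        return Directory.EnumerateFiles(folder, "*", SearchOption.AllDirectories)
            .Sum(file => File.ReadLines(file)
                .LongCount(line => !String.IsNullOrWhiteSpace(line)));
    }

    public static void Main(string[] args)
    {
        // Assumed location of the downloaded blobs; pass a different path as the first argument.
        string folder = args.Length > 0 ? args[0] : "output";
        long count = CountEvents(folder);
        Console.WriteLine("Events found: {0} (expected 500000)", count);
    }
}
```

For the SQL output, a simple row count on the target table would serve the same purpose.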